The AI week: AI music, local LLM browsers, and latest open model releases

When I started this blog I was drawn to the idea of creating a blog about AI, written by a human. And every post to date has been written by a human - by me! But my pace of publishing (currently less than one post a week) is unable to keep up with the speed of news and events in AI. So I’ve decided to do a little experiment:

I’ll keep writing how-tos and techy tutorials, and throughout my week I’ll collect links to interesting and newsworthy stuff in and around AI. And then, I’ll get the robots to write the news.

This is the first such post, written - apart from this bit - entirely by AI. It’s experimental, and I’ll fine-tune the process over the coming weeks. And when I’ve got it nailed, I’ll share exactly how I do it here on this blog.

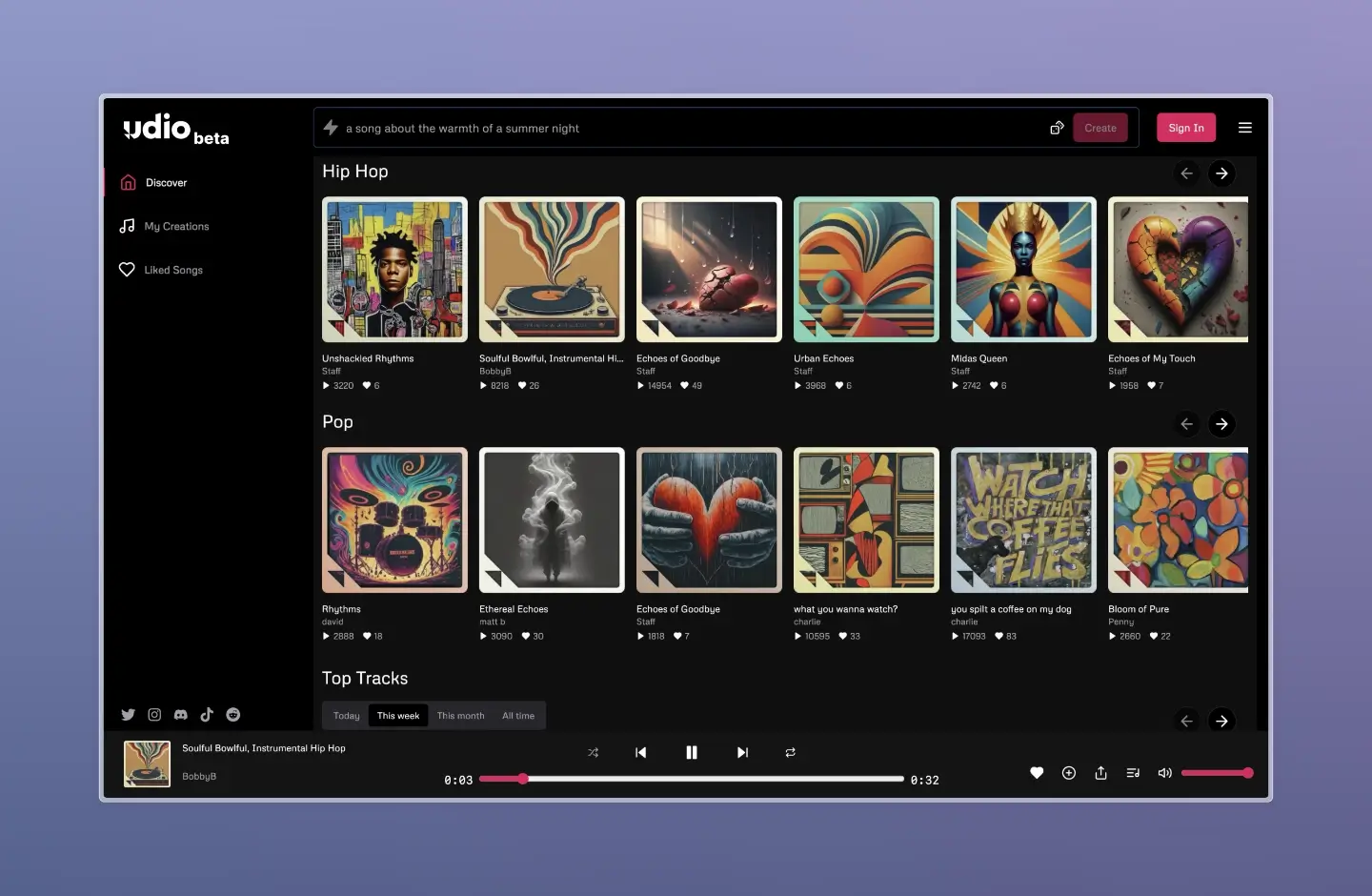

Udio synthesizes music on demand via AI

A new AI music generator called Udio launched this week, allowing users to create high-fidelity musical tracks from text prompts. Like other AI music tools such as Suno, Udio can generate music across a variety of genres, from hip hop, to country, to classical and everything in between.

Udio uses a two-stage process, first employing a large language model to generate lyrics, then synthesizing the actual audio using a diffusion model. It has built-in filters to block recreations of copyrighted music. It’s an impressive feat, and is set to further heat up the debate around the impact of AI on the wider music and creative industries.

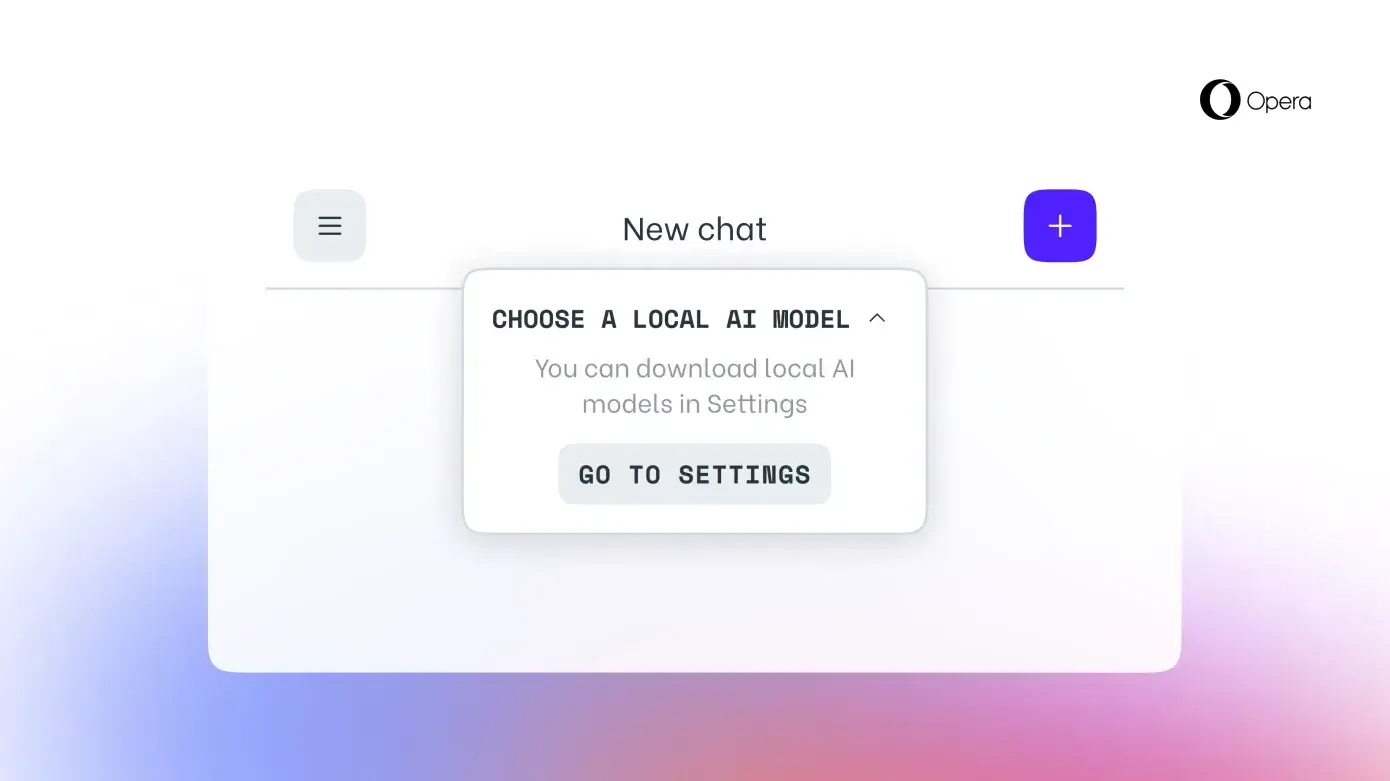

Opera to allow local LLM use

In a significant development, Opera announced it will enable users to download and run over 150 large language models (LLMs) locally in their browser.

This local LLM feature is part of Opera’s AI Feature Drops Program, leveraging the open source Ollama framework to run the models. Running LLMs locally offers Opera’s users data privacy and security as well as the flexibility to find and use models specialised for specific tasks.
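To give a feel for what running a model through Ollama looks like outside the browser, here is a minimal sketch that sends a prompt to a locally running Ollama server over its HTTP API. It is not how Opera wires things up internally. It assumes a standalone Ollama install listening on its default port (11434), and the helper names `build_generate_request` and `generate` are made up for this illustration.

```python
import json
import urllib.request

# Assumption: a standalone Ollama server running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a non-streaming request to Ollama's generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local model and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With streaming disabled, the generated text arrives in the "response" field.
    return body["response"]
```

With the server running and a model already downloaded (for example via `ollama pull mistral`, where the model name is only an example), calling `generate("mistral", "Summarise this week's AI news in one sentence.")` returns the model's reply as a string. Nothing leaves your machine, which is the privacy argument in a nutshell.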

This week’s new open models

A flurry of exciting new open models was announced this week, closing the gap between the proprietary big boys and the open source LLM scene.

Mistral AI’s Mixtral 8x22B - A powerful open model

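Mixtral 8x22B is Mistral AI’s new release, a state-of-the-art open source language model expected to outperform its previous Mixtral 8x7B offering and compete with Claude 3 and GPT-4. Mixtral 8x22B has roughly 141 billion parameters in total, of which about 39 billion are active for any given token. That is lower than a naive 8 × 22B = 176B because the experts share some layers. It also offers an impressive 64K-token (65,536) context window.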

Stability AI releases Stable LM 2 12B

Stability AI unveiled their new 12 billion parameter Stable LM 2 model, available in base and instruction-tuned versions. Trained on seven languages including English and Spanish, it aims to provide efficient multilingual performance.

Cohere’s Command R+: Enterprise-grade open LLM

Command R+ is Cohere’s powerful new language model tailored for enterprise use cases. Building on their existing Command R offering, R+ adds advanced retrieval augmentation, multilingual support across 10 languages, and enhanced tool use for workflow automation.

Google expands Gemma with CodeGemma and RecurrentGemma

Google unveiled two additions to its open Gemma model family: CodeGemma for coding tasks and RecurrentGemma, an efficiency-optimized research model. CodeGemma aims to streamline development workflows via intelligent code completion, generation and chat capabilities.

Meanwhile, RecurrentGemma leverages a new recurrent architecture and local attention mechanism to boost memory efficiency for high throughput research use cases.

Meta’s Llama 3 coming soon… (still)

Meta confirmed plans to release its next-generation open source Llama 3 language model within the next month. Llama 3 will significantly upgrade Meta’s foundational AI for generative assistants.

Expected enhancements include around 140 billion parameters (up from 70B in Llama 2), better handling of complex topics, and overall improved response quality. Meta will roll out multiple Llama 3 versions this year but remains cautious on generative AI beyond text for now.