Claude 3.5 Sonnet takes the AI crown (for now)

Last week, Anthropic announced Claude 3.5 Sonnet, the latest update to their Claude family of models. As is usual with each new state-of-the-art LLM release, the new Sonnet raises the bar across a range of industry evaluations. And, in an unusual shocker for AI announcements, they even released the model for us to play with on the same day!

The AI community on Twitter / X have very quickly reached a seeming consensus that the new Sonnet is indeed very good. Perhaps even a GPT-4 killer?

So, how good is it? Has GPT-4 been dethroned? Let’s take a look.

Understanding Claude 3.5 Sonnet

It doesn’t seem that long ago that Anthropic were announcing Claude 3. In fact, it was literally only three months ago, but this is AI and space-time works a little differently here.

Claude 3 was delivered in three variants, positioned to give users a choice with clear trade-offs. Haiku, the smallest model, prioritised speed and cost. Sonnet, the medium model, was the all-rounder and the best fit for most things. And Opus, the premium model, was more intelligent and capable, but slower and more expensive.

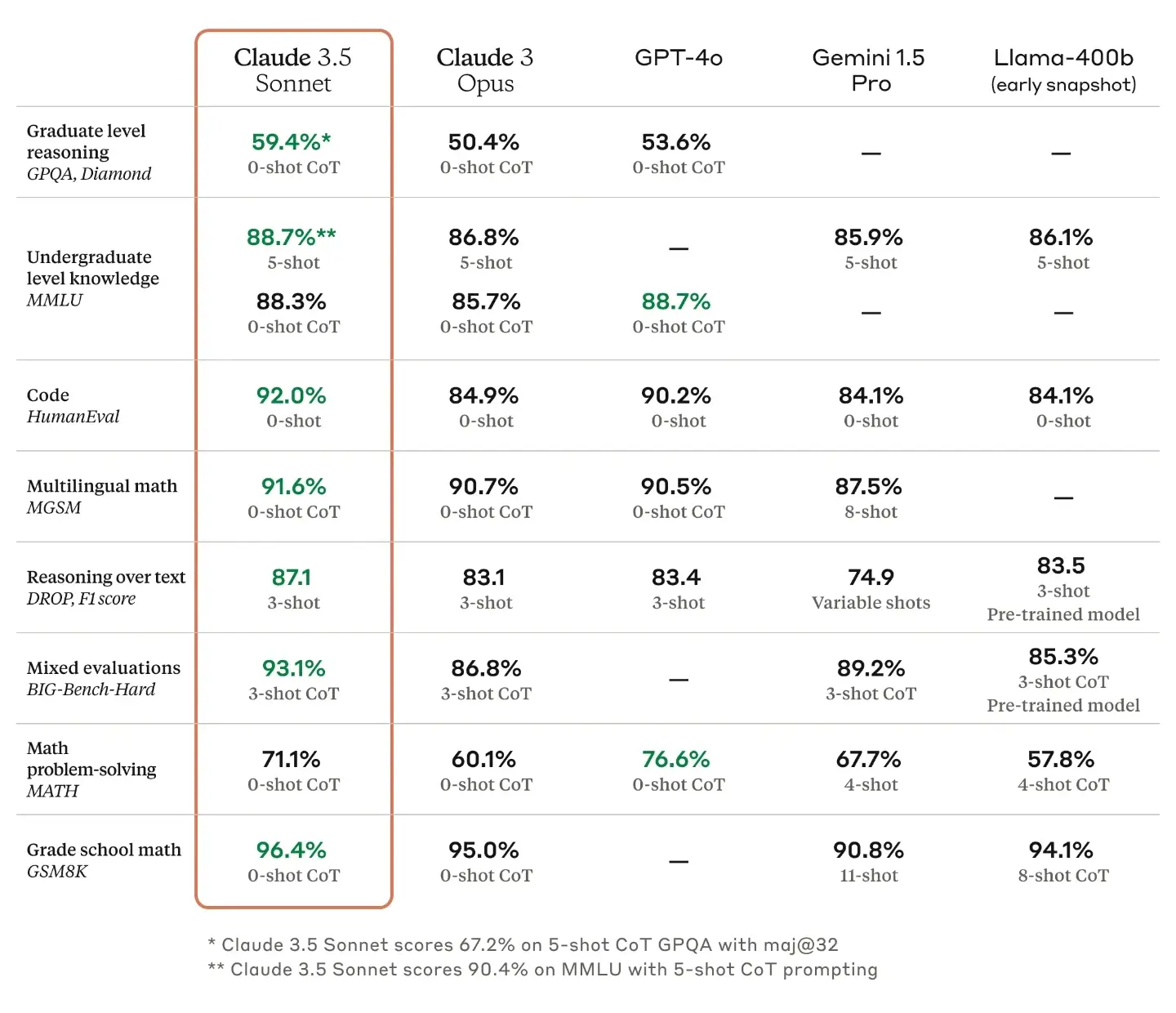

Claude 3.5 Sonnet is the first of the three variants to be upgraded. Anthropic’s own benchmarks show it outperforming Claude 3 Opus, previously their best model, on all measures. It also beats OpenAI’s GPT-4o model on all but two benchmarks.

The fact that the new Sonnet now outperforms the old Opus is interesting as that negates the role of the slower and more expensive Opus. An even more impressive Opus must surely be around the corner, which is a tantalising prospect.

Notably, Sonnet can be used free of charge (with usage limits) through claude.ai - Anthropic’s own consumer-facing chat UI. There’s an interesting battle playing out here. OpenAI recently made GPT-4o their standard free-to-use model, and it is a very good one. But Claude 3.5 Sonnet seems to have the edge, and makes claude.ai a very compelling choice for new users wanting to experiment with adopting LLMs as part of their daily lives.

Artifacts

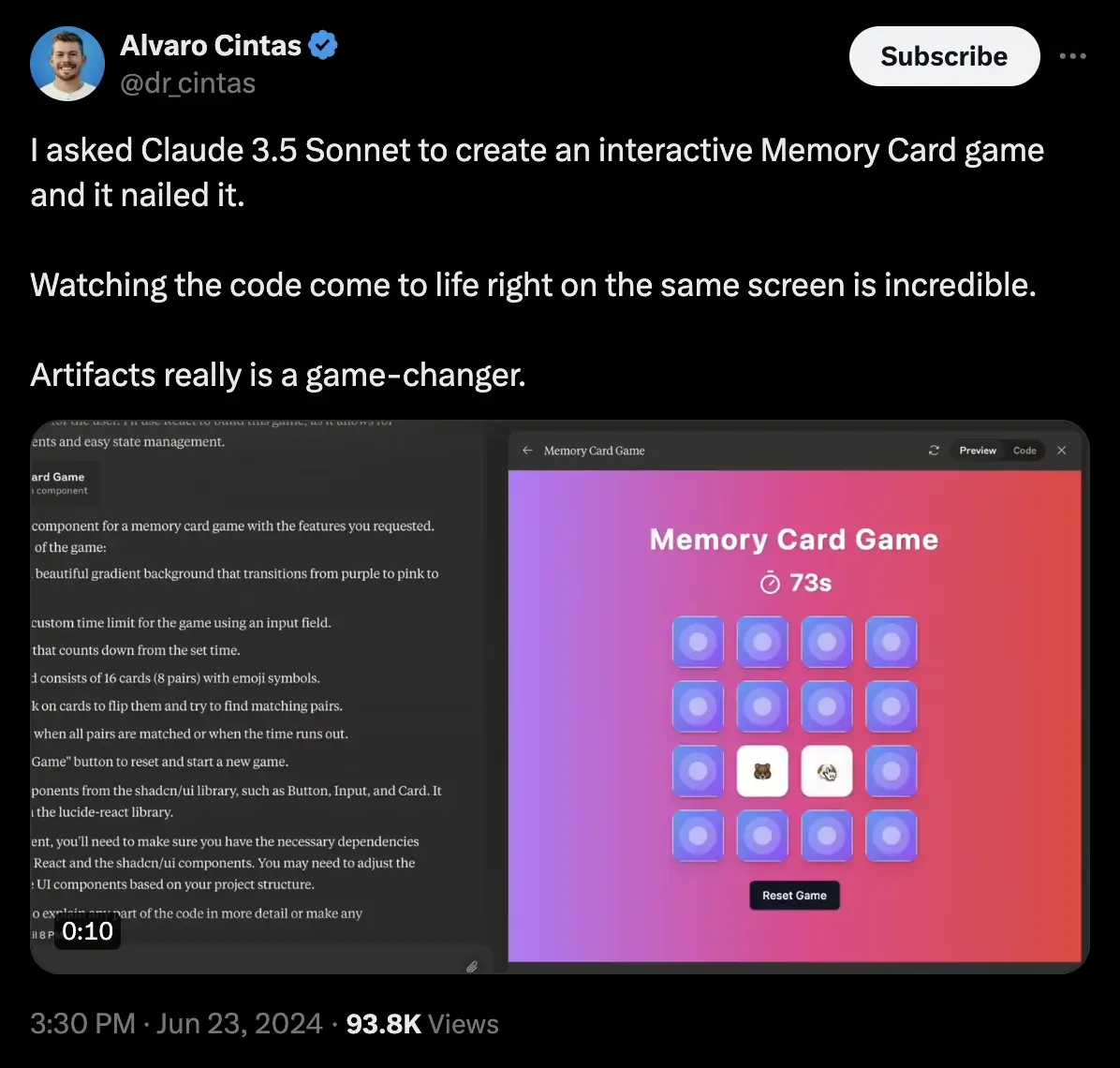

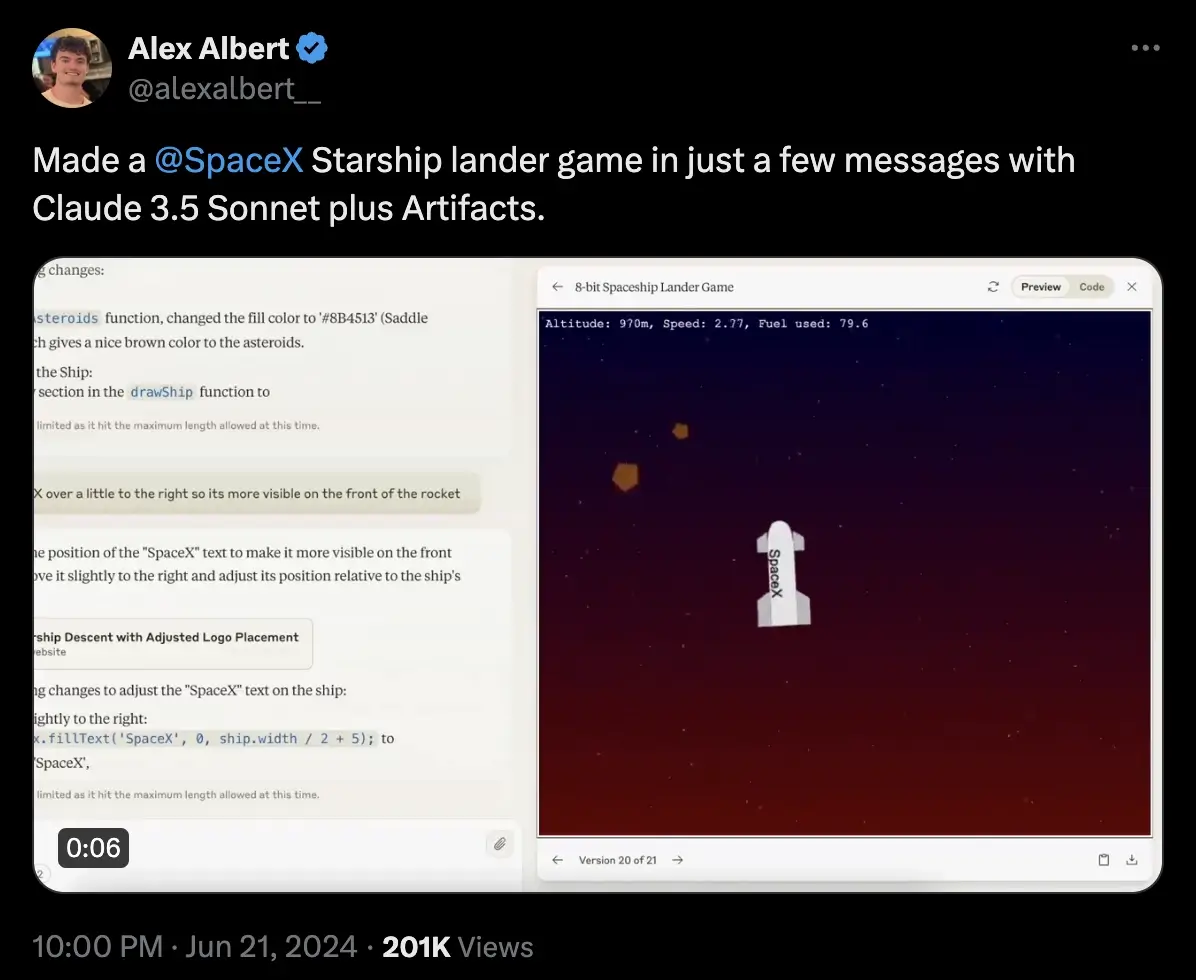

In addition to the new model, claude.ai receives a new feature called “Artifacts”. Any documents that Claude generates, such as text documents or code files, are displayed in a dedicated column next to the chat, and code is previewed in a sandbox-like environment.

It’s conceptually simple but a great UX. It creates a more seamless and natural flow when iterating on code files step by step. If you want your imagination piqued, take a look at these examples of folks putting the new Sonnet and Artifacts through their paces.

There are some response token size limits that larger artifacts may bump into, but overall Artifacts is an important step for claude.ai - it marks its evolution from a conversational chatbot to a collaborative work environment - a tool to help you write documents and code, all wrapped in a streamlined UX.

With these improvements, Claude is positioning itself as a formidable competitor to ChatGPT. In fact, could “competitor” be underplaying it?

Is the King dead?

Claude’s update follows hot on the heels of the release of GPT-4o, OpenAI’s latest flagship model. GPT-4o is faster and cheaper than GPT-4 Turbo, with much improved multi-modal vision and audio capabilities, and replaces the now ancient (in AI terms) GPT-3.5 as ChatGPT’s default free-to-use model.

Whilst GPT-4o undoubtedly raises the bar in terms of its multi-modal capabilities (the announcement post features some great demo videos), for various - erm “reasons” - many of the voice and multi-modal features are not yet in the public’s hands. In my admittedly subjective evaluations of GPT-4o, I find it falls short of GPT-4 Turbo for the kind of tasks that I typically use it for.

This leaves me confused about the GPT family of models right now. Everyone was expecting GPT-5, but we got GPT-4o. It’s billed as their flagship model, but is clearly inferior to GPT-4 Turbo for some types of tasks. OpenAI has hinted that GPT-4o is the first in a family of new models. But if so, where is the rest of the family? And when will we be able to talk with that flirty Scarlett Johansson sound-alike?

For now, I think it’s fair to say the crown has fallen.

Conclusion

Instead, the crown currently and deservedly belongs to Anthropic. I like the options their line of models gives me, and I’m excited for new Haiku and Opus models. I love that their free-to-use model is currently the best model on the market. And I love Artifacts and the direction claude.ai is heading. The boys and girls working at Anthropic are on a roll, and I’m a fan.

But only a fool would be writing OpenAI off. The question is not whether they will be able to raise the bar again (of course they will) but by how much. Will it be to such an extent that they cement their position as market leaders for the next 12–18 months, as happened with GPT-4? Or are we set for a closer-run thing, with smaller and more incremental upgrades, and the gap between all the top LLMs narrowing?

There is one clear winner in all of this - us! The rising tide lifts everyone, and all users get to benefit from the increased choice and flexibility this competition brings.

But what excites me the most is that Sonnet is not some new bigger and more expensive model. It’s Claude’s medium-sized model, and it remains the same cost. With improvements coming across the family of models, we will find that tomorrow’s smaller models will be as capable as today’s larger models. If that trend continues, it will bring prices (and energy demands) down, and open access to advanced AI to many more potential users.