Set up a local, private AI coding assistant with Continue and Ollama

In our previous post, I walked you through the process of setting up Continue.dev with Groq and Llama 3, resulting in a best-in-class, fast and powerful (GPT-4 comparable) AI coding assistant in your VS Code environment.

But what if you’re looking for a solution that prioritises privacy, allows for offline use, and keeps everything running locally on your own machine? Enter our old friend Ollama.

Ollama is an open source toolset for running large language models, and it makes downloading and running open-weight models pleasingly simple. And of course, it integrates very easily with Continue, providing you with all the same powerful features without relying on external APIs and LLM providers.

What’s more, Ollama actually unlocks a couple of Continue features that the Groq and Llama 3 combo didn’t: namely tab autocompletion and custom embedding models (which power Continue’s RAG-like code search features).

We’re going to dive into all of that and more in this article. So, whether you’re concerned about privacy, need to work offline, or simply prefer the flexibility and control of a local setup, this guide will walk you through all the steps to get up and running with Continue and Ollama. Let’s get started!

Setting up Ollama

If you haven’t already done so, head over to ollama.com, click the big download button and follow the instructions. If you need to, read my post on running models locally with Ollama, which walks you through the installation and basic mechanics of Ollama.

To use Ollama with Continue, we’re going to need three different models to experiment with different features of Continue.

- For the main coding assistant chat features, we’ll use Llama 3, just like we did in the last post. But whilst Groq gives us access to the full 70b parameter version of Llama 3, unless you’ve got some beefy GPUs at your disposal, we’re going to have to settle for the smaller 8b parameter version.

- To benefit from tab autocompletion, we need a model that is specifically trained to handle what are known as Fill-in-the-Middle (FIM) predictions. Unlike normal text prediction, which adds tokens to the end of a sequence, with FIM you already have the beginning and the end, and the model predicts what goes in the middle. Llama 3 does not support FIM, so instead we’ll use StarCoder2 3b, a tiny model that should run pretty fast and is trained specifically on code across many programming languages.

- We’ll also download an embeddings model. We’ll configure Continue to use nomic-embed-text via Ollama to generate embeddings, and we’ll have a play with Continue’s code retrieval features.
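To make the FIM idea concrete, here is a minimal Python sketch of how a prefix-suffix-middle prompt can be assembled from the code around your cursor. It assumes StarCoder-style sentinel tokens (`<fim_prefix>`, `<fim_suffix>`, `<fim_middle>`); other FIM models use different tokens, and this is an illustration rather than Continue’s own prompt template. The helper names are hypothetical.

```python
# StarCoder-style FIM sentinel tokens (assumption: check your model's tokenizer).
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def split_at_cursor(document: str, cursor: int) -> tuple[str, str]:
    """Split a source file into the text before and after the cursor offset."""
    return document[:cursor], document[cursor:]


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Return a prefix-suffix-middle prompt.

    The model sees the code before and after the cursor, then generates
    the missing middle section after the <fim_middle> token.
    """
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


if __name__ == "__main__":
    source = "def add(a, b):\n    \n\nprint(add(1, 2))\n"
    # Put the cursor at the end of the empty, indented line inside add().
    cursor = source.index("    \n") + 4
    before, after = split_at_cursor(source, cursor)
    print(build_fim_prompt(before, after))
```

The key point is that the model is given both sides of the gap, which is exactly what a chat-style model like Llama 3 was never trained to handle.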

To install the models we need, open up your terminal and paste in the following lines:

```shell
ollama pull llama3:8b         # 4.7 GB download
ollama pull starcoder2:3b     # 1.7 GB download
ollama pull nomic-embed-text  # 274 MB download
```

In total, this is going to download about 6.7 GB of model weights. A great opportunity to step away from the computer and make a cup of tea ☕.
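Once your tea is finished, it’s worth confirming that all three models actually arrived. The sketch below asks the local Ollama server for its installed models via the `/api/tags` endpoint and reports anything missing. It assumes Ollama is running on its default address, `http://localhost:11434`, and that untagged pulls (like `nomic-embed-text`) are stored under the `:latest` tag; the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address (assumption: unchanged)
REQUIRED = ["llama3:8b", "starcoder2:3b", "nomic-embed-text"]


def normalise(name: str) -> str:
    """Ollama stores models pulled without a tag under ':latest'."""
    return name if ":" in name else f"{name}:latest"


def missing_models(tags: dict, required: list[str]) -> list[str]:
    """Return the required models that do not appear in an /api/tags response."""
    installed = {normalise(m["name"]) for m in tags.get("models", [])}
    return [r for r in required if normalise(r) not in installed]


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Ask the local Ollama server which models it has downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        missing = missing_models(fetch_tags(), REQUIRED)
    except OSError as err:  # URLError (e.g. connection refused) is an OSError
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
    else:
        print("All models present" if not missing else f"Missing: {missing}")
```

If you’d rather stay in the terminal, `ollama list` gives you the same information at a glance.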

Integrating Ollama with Continue

Once the models are downloaded, hop into VS Code and edit Continue’s config.json (hit ⇧+⌘+P and type continue config to quickly find it).

All we need to do is make the following three changes:

- "models": add a definition for our local Llama 3 model so it is available to use in Continue.
- "tabAutocompleteModel": set the tab autocomplete model to StarCoder 2.
- "embeddingsProvider": set the embeddings provider to Nomic Embed Text.

See below:

```json
{
  "models": [
    {
      "title": "Local Llama 3 8b",
      "provider": "ollama",
      "model": "llama3:8b",
      "completionOptions": {
        "stop": ["<|eot_id|>"]
      },
      ...
    }
  ],
  "tabAutocompleteModel": {
    "title": "Starcoder 2 3b",
    "provider": "ollama",
    "model": "starcoder2:3b"
  },
  "embeddingsProvider": {
    "title": "Nomic Embed Text",
    "provider": "ollama",
    "model": "nomic-embed-text"
  },
  ...
}
```

That’s all there is to it. Continue is now ready and waiting to assist your coding, locally powered by Ollama.
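Setting the provider to "ollama" means Continue talks to Ollama’s local HTTP API rather than a hosted service. As a rough illustration (not Continue’s actual code), this Python sketch sends one chat message to the same model with the same stop sequence, using Ollama’s `/api/chat` endpoint. It assumes Ollama is listening on its default port 11434; the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption)


def build_chat_payload(question: str, model: str = "llama3:8b") -> dict:
    """Build a non-streaming /api/chat request mirroring the config above."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,  # return one JSON object instead of a token stream
        # Same stop sequence as "completionOptions.stop" in config.json
        "options": {"stop": ["<|eot_id|>"]},
    }


def chat(question: str, base_url: str = OLLAMA_URL) -> str:
    """Send the request and return the assistant's reply text."""
    data = json.dumps(build_chat_payload(question)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    try:
        print(chat("Explain a Python list comprehension in one sentence."))
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
```

The `<|eot_id|>` stop sequence is Llama 3’s end-of-turn token; passing it explicitly guards against the model running on past the end of its answer.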

Using Continue with Ollama

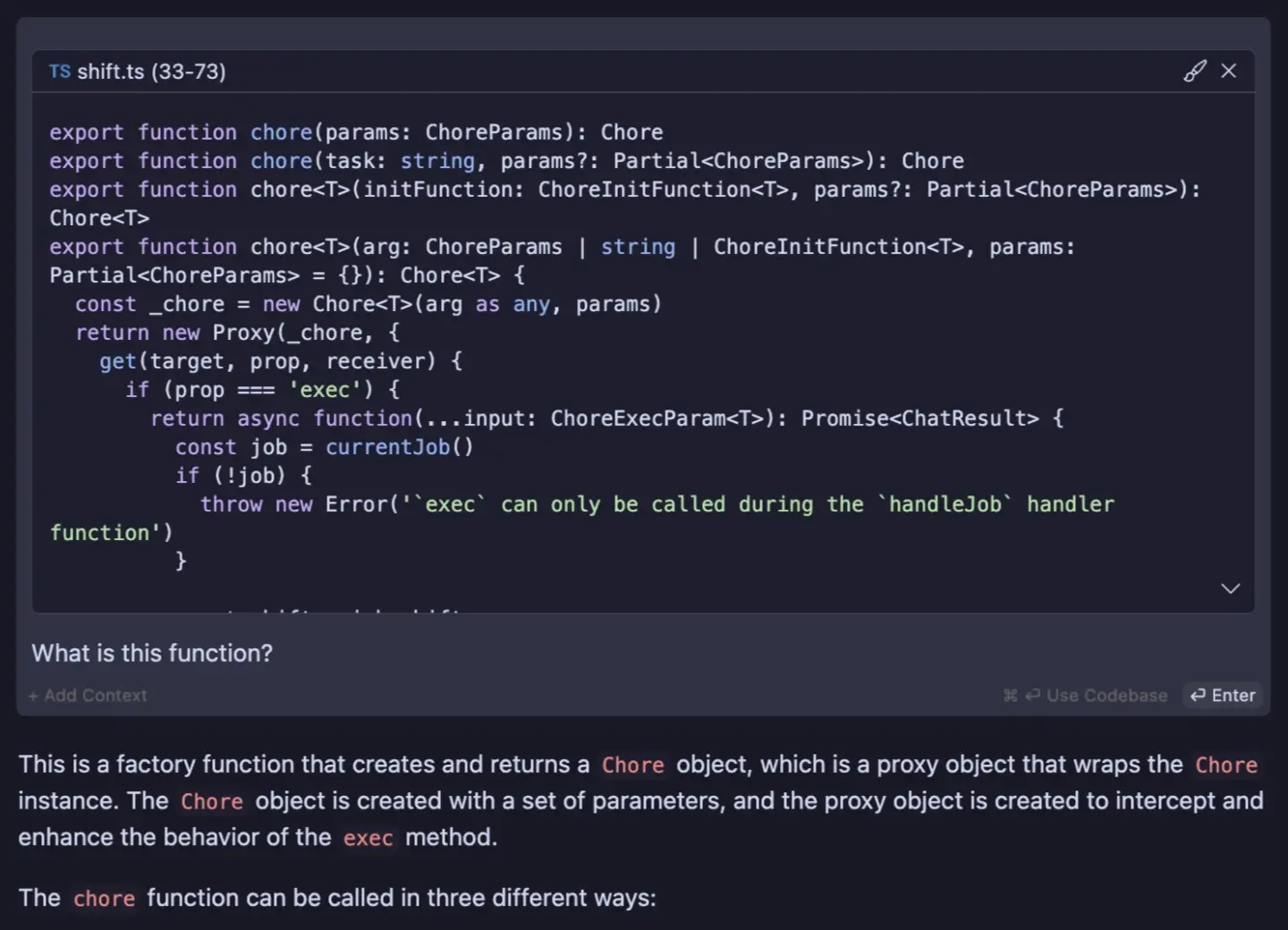

Now that everything is ready, we can try this out. Highlight some code, tap ⌘+L to bring up the side panel, and make sure the “Local Llama 3 8b” model is selected in the dropdown at the bottom of the chat panel. You can chat with Llama 3, ask questions about your code, and use all the slash commands we covered in the previous post. Similarly, highlight some code, tap ⌘+I and instruct Llama to make changes to your code.

We covered all of this last time round, so if you need to get up to speed with the fundamentals of how to use Continue, read the post on using Continue with Groq and Llama 3.

Using Ollama with Continue gives us some new toys to play with, so let’s try them out.

Tab autocompletion

Tab autocomplete works like this: you are busy typing away writing some code; you momentarily pause to scratch your head; Continue takes the snippet of code you are working on and passes it to a Fill-in-the-Middle capable LLM; and in the time it takes you to finish scratching and move your fingers back to the keyboard, it has had a go at completing the line or chunk of code you’re working on. You can either ignore the suggestion or, if it’s a good prediction, hit TAB ⇥ to accept it.
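The core of that round trip can be sketched as a single request to StarCoder2 through Ollama’s `/api/generate` endpoint. This is a simplified illustration, not Continue’s implementation (which also gathers extra context and waits for you to pause). It assumes Ollama’s default port, StarCoder-style FIM tokens, and that `<file_sep>` is a sensible extra stop token for StarCoder2; `raw` tells Ollama to send the prompt verbatim instead of wrapping it in the model’s template. The helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption)


def build_autocomplete_payload(prefix: str, suffix: str, model: str = "starcoder2:3b") -> dict:
    """Build a raw FIM request for Ollama's /api/generate endpoint."""
    # StarCoder-style FIM sentinels (assumption: check your model's tokenizer)
    prompt = f"<fim_prefix>{prefix}<fim_suffix>{suffix}<fim_middle>"
    return {
        "model": model,
        "prompt": prompt,
        "raw": True,  # bypass the model's prompt template
        "stream": False,
        "options": {
            "num_predict": 64,  # keep suggestions short so they arrive quickly
            "temperature": 0.2,  # favour predictable completions
            "stop": ["<file_sep>"],  # StarCoder2 file separator (assumption)
        },
    }


def complete(prefix: str, suffix: str, base_url: str = OLLAMA_URL) -> str:
    """Return the model's guess for the code between prefix and suffix."""
    body = json.dumps(build_autocomplete_payload(prefix, suffix)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)["response"]


if __name__ == "__main__":
    try:
        print(complete("def fibonacci(n):\n    ", "\n\nprint(fibonacci(10))\n"))
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
```

Capping `num_predict` matters here: an autocomplete suggestion that arrives after you’ve started typing again is no use to anyone.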

The promise is that Continue becomes a Jedi-like coding assistant that knows what you’re coding before you’ve even coded it. In practice, though, your mileage may vary. I often switch AI tab autocomplete off: I find the UX slightly awkward as it works in tandem with native VS Code tab completions (which I use a lot), and I feel like the AI completions get in my way more than they help me.

The Continue docs state they “will be greatly improving the experience over the next few releases”, suggesting the devs know it’s a bit half-baked currently. But it’s definitely worth trying out yourself - hopefully it will improve over time with future releases.

Embeddings and code search

I wrote a little bit about embeddings in my RAG explainer. In short, an embedding is a vector of numerical values that captures the semantic meaning of a chunk of text (or in this case, a chunk of code). Embeddings are an important part of how large language models work, but for our understanding here it’s just important to know that we can use embeddings to compare how semantically close different chunks of text/code are.
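The usual way to measure how “close” two embeddings are is cosine similarity: the cosine of the angle between the two vectors, where values near 1 mean the chunks point in the same semantic direction. Here is a minimal pure-Python version, with toy three-dimensional vectors standing in for real embeddings (the vectors are made up for illustration).

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = unrelated."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction to compare
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
    sort_fn = [0.9, 0.1, 0.0]
    sort_helper = [0.8, 0.2, 0.1]
    http_client = [0.0, 0.2, 0.9]
    print(cosine_similarity(sort_fn, sort_helper))  # close to 1
    print(cosine_similarity(sort_fn, http_client))  # much smaller
```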

Continue generates embeddings out of the box using Transformers.js, a decent “it just works” solution that produces 384-dimensional embeddings. nomic-embed-text via Ollama produces 768-dimensional embeddings, which in theory should improve the accuracy of Continue’s code retrieval features.

The Continue docs give some examples where you might use code retrieval. It’s achieved using the @codebase or @folder context providers and typing a prompt. For example:

```
@codebase how do I implement the `LLMAdapter` abstract class?
```

The above generates embeddings from your codebase, searches them for the `LLMAdapter` abstract class, and attempts to explain how to implement a concrete version of the class.

```
@src what events does the `Job` class emit?
```

This example generates embeddings from the `/src` folder, searches them for the `Job` class and explains what events it emits.
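Conceptually, a query like these boils down to three steps: embed each chunk of code, embed the question, and hand the closest chunks to the LLM as context. Here is a deliberately simplified Python sketch of that retrieval step, using Ollama’s `/api/embeddings` endpoint with nomic-embed-text on the default port. Continue’s real indexing and retrieval are considerably more involved; the sample chunks and helper names below are hypothetical.

```python
import json
import math
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default Ollama address (assumption)


def embed(text: str, base_url: str = OLLAMA_URL) -> list[float]:
    """Get an embedding for one piece of text from nomic-embed-text via Ollama."""
    body = json.dumps({"model": "nomic-embed-text", "prompt": text}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/embeddings",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)["embedding"]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def top_k(query_vec: list[float], chunk_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the names of the k chunks most similar to the query."""
    ranked = sorted(chunk_vecs, key=lambda name: cosine(query_vec, chunk_vecs[name]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical code chunks standing in for files in your project.
    chunks = {
        "job.py": "class Job(EventEmitter):\n    def run(self): self.emit('started')",
        "utils.py": "def slugify(text): return text.lower().replace(' ', '-')",
        "adapter.py": "class LLMAdapter(ABC):\n    @abstractmethod\n    def complete(self, prompt): ...",
    }
    try:
        vectors = {name: embed(code) for name, code in chunks.items()}
        query = embed("what events does the Job class emit")
    except OSError as err:
        print(f"Could not reach Ollama: {err}")
    else:
        print(top_k(query, vectors, k=1))  # the chunk(s) that would be passed to the LLM
```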

This is powerful stuff - it turns your AI coding assistant from a coding expert with general knowledge, to a coding expert with specific knowledge about what you’re working on.

Conclusion

By using Continue with Ollama, not only do you gain a fully local AI coding assistant, but you do so with complete control over your data and can ensure the privacy of your codebase. With Ollama’s support for tab autocompletion and custom embedding models, you can enhance Continue’s capabilities even further, making it an essential tool in your coding workflow.

But the real beauty of Continue lies in its flexibility. You don’t have to choose between the speed and power of Groq, or the privacy and offline capabilities of Ollama. Continue allows you to seamlessly switch between different models and setups, adapting to your specific needs and preferences. You can leverage the strengths of each approach, using Groq and Llama for lightning-fast coding assistance during your day-to-day work, and switching to Ollama when privacy is a top priority or when you need to work offline.

So, whether you’re a developer concerned about the confidentiality of your code, someone who frequently works in offline environments, or simply curious about the possibilities of local AI coding assistants, I encourage you to dive in and experiment with Continue and Ollama. The setup process is straightforward, and the potential benefits are immense. Have fun, and happy coding!